I previously wrote about converting an individual puppet module’s repo to use the Puppet Development Kit. We can also convert controlrepos to use the PDK. I am starting with a “traditional” controlrepo, described here, as well as centralized tests, described here. To follow this article directly, you need to:

- Have all hiera data and role/profile/custom modules in the /dist directory

- Have all tests, for all modules, in the /spec directory

If your controlrepo looks different, this article can be used to migrate to the PDK, but you will have to modify some of the sections a bit.

This will be a very lengthy blog post (over 4,000 words!) and covers a very time-consuming process. It took me about 2 full days to work through this on my own controlrepo. Hopefully, this article helps you shave significant time off the effort, but don’t expect a very quick change.

Managing the PDK itself

First, let’s make sure we have a profile to install and manage the PDK. As we use the role/profile pattern, we create a class profile::pdk with the parameter version, which we can specify in hiera as profile::pdk::version: '1.7.1' (current version as of this writing). This profile can then be added to an appropriate role class, like role::build for a build server, or applied directly to your laptop. I use only Enterprise Linux 7, but we could certainly flesh this out to support multiple OSes:

# dist/profile/manifests/pdk.pp
class profile::pdk (
  String $version = 'present',
) {
  package {'puppet6-release':
    ensure => present,
    source => "https://yum.puppet.com/puppet6/puppet6-release-el-7.noarch.rpm",
  }

  package {'pdk':
    ensure => $version,
    require => Package['puppet6-release'],
  }
}

# spec/classes/profile/pdk_spec.rb
require 'spec_helper'
describe 'profile::pdk' do
  on_supported_os.each do |os, facts|
    next unless facts[:kernel] == 'Linux'
    context "on #{os}" do
      let (:facts) {
        facts.merge({
          :clientcert => 'build',
        })
      }

      it { is_expected.to compile.with_all_deps }
      it { is_expected.to contain_package('puppet6-release') }
      it { is_expected.to contain_package('pdk') }
    end
  end
end

Once this change is pushed upstream and the build server (or other target node) checks in, the PDK is available:

$ pdk --version

1.7.1

Now we are almost ready to go. Of course, we need to start with good, working tests! If any tests are currently failing, we need to get them to a passing state before continuing, like this:

Finished in 3 minutes 12.2 seconds (files took 1 minute 17.89 seconds to load)

782 examples, 0 failures

With everything in a known good state, we can then be sure that any failures are related to the PDK changes, and only the PDK changes.

Setting up the PDK Template

The PDK comes with a set of pre-packaged templates. It is recommended to stick with a set of templates designed for the current PDK version for stability. However, the templates are online and may be updated without an accompanying PDK release. We may choose to stick with the on-disk templates, we may point to the online templates from Puppet, or we may create our own! For those working with the on-disk templates, you can skip down to working with .sync.yml.

To switch to another template, we use pdk convert --template-url. If this is our own template, we should make sure the latest commit is compliant with the PDK version we are using. If we point to Puppet’s templates, we essentially shift to the development track. Make sure you understand this before changing the templates. We can get back to using the on-disk template with the url file:///opt/puppetlabs/pdk/share/cache/pdk-templates.git, though, so this isn’t a decision we have to live with forever. Here’s the command to switch to the official Puppet templates:

$ pdk convert --template-url=https://github.com/puppetlabs/pdk-templates

------------Files to be added-----------

.travis.yml

.gitlab-ci.yml

.yardopts

appveyor.yml

.rubocop.yml

.pdkignore

----------Files to be modified----------

metadata.json

Gemfile

Rakefile

spec/spec_helper.rb

spec/default_facts.yml

.gitignore

.rspec

----------------------------------------

You can find a report of differences in convert_report.txt.

pdk (INFO): Module conversion is a potentially destructive action. Ensure that you have committed your module to a version control system or have a backup, and review the changes above before continuing.

Do you want to continue and make these changes to your module? Yes

------------Convert completed-----------

6 files added, 7 files modified.

Now, everyone’s setup is probably a little different and thus we cannot predict the entirety of the changes each of us must make, but there are some minimal changes everyone must make. The file .sync.yml can be created to allow each of us to override the template defaults without having to write our own templates. The layout of the YAML starts with the filename the changes will modify, followed by the appropriate config section and then the value(s) for that section. We can find the appropriate config section by looking at the template repo’s erb templates. For instance, I do not use AppVeyor, GitLab, or Travis with this controlrepo, so to have git ignore them, I made the following changes to the required list for .gitignore:

$ cat .sync.yml
---
.gitignore:
  required:
    - 'appveyor.yml'
    - '.gitlab-ci.yml'
    - '.travis.yml'
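To make the filename, section, value layout concrete, here is a minimal Ruby sketch that parses the same YAML and prints one row per override. It uses an inline copy of the file so it runs anywhere; the helper name sync_overrides is my own invention for illustration and is not part of the PDK.

```ruby
require 'yaml'

# Inline copy of the .sync.yml shown above, so the sketch is self-contained.
SYNC_YML_TEXT = <<~YAML
  ---
  .gitignore:
    required:
      - 'appveyor.yml'
      - '.gitlab-ci.yml'
      - '.travis.yml'
YAML

# Hypothetical helper: flatten the file -> section -> value nesting
# into [file, section, value] rows.
def sync_overrides(yaml_text)
  YAML.safe_load(yaml_text).flat_map do |file, sections|
    sections.map { |section, value| [file, section, value] }
  end
end

sync_overrides(SYNC_YML_TEXT).each do |file, section, value|
  puts "#{file}: #{section} = #{value.inspect}"
end
```

Each top-level key is a templated file, each second-level key is a setting that file's erb template reads, and the value is whatever that setting expects (here, a list of extra ignore entries).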

When changes are made to the sync file, they must be applied with the pdk update command. We can see that originally, these unused files were to be committed, but now they are properly ignored:

$ git status

# On branch pdk

# Changes not staged for commit:

# (use "git add <file>..." to update what will be committed)

# (use "git checkout -- <file>..." to discard changes in working directory)

#

# modified: .gitignore

# modified: .rspec

# modified: Gemfile

# modified: Rakefile

# modified: metadata.json

# modified: spec/default_facts.yml

# modified: spec/spec_helper.rb

#

# Untracked files:

# (use "git add <file>..." to include in what will be committed)

#

# .gitlab-ci.yml

# .pdkignore

# .rubocop.yml

# .sync.yml

# .travis.yml

# .yardopts

# appveyor.yml

no changes added to commit (use "git add" and/or "git commit -a")

$ cat .sync.yml
---
.gitignore:
  required:
    - 'appveyor.yml'
    - '.gitlab-ci.yml'
    - '.travis.yml'

$ pdk update

pdk (INFO): Updating mss-controlrepo using the template at https://github.com/puppetlabs/pdk-templates, from 1.7.1 to 1.7.1

----------Files to be modified----------

.gitignore

----------------------------------------

You can find a report of differences in update_report.txt.

Do you want to continue and make these changes to your module? Yes

------------Update completed------------

1 files modified.

$ git status

# On branch pdk

# Changes not staged for commit:

# (use "git add <file>..." to update what will be committed)

# (use "git checkout -- <file>..." to discard changes in working directory)

#

# modified: .gitignore

# modified: .rspec

# modified: Gemfile

# modified: Rakefile

# modified: metadata.json

# modified: spec/default_facts.yml

# modified: spec/spec_helper.rb

#

# Untracked files:

# (use "git add <file>..." to include in what will be committed)

#

# .pdkignore

# .rubocop.yml

# .sync.yml

# .yardopts

no changes added to commit (use "git add" and/or "git commit -a")

Anytime we pdk update, we will still receive new versions of the ignored files, but they won’t be committed to the repo and a git clean or a clean checkout will remove them.

After initial publication, I was made aware that you can completely delete or unmanage a file using delete: true or unmanaged: true, as described here, rather than using .gitignore.

We may need to implement other overrides, except that we do not know what they would be yet, so let’s commit our changes so far. Then we can start working on validation or unit tests. It doesn’t really matter which we choose to work on first, though my preference is validation first as it does not depend on the version of Puppet we are testing.

PDK Validate

The PDK validation check, pdk validate, will check the syntax and style of metadata.json and any task json files, syntax and style of all puppet files, and ruby code style. This is roughly equivalent to our old bundle exec rake syntax task. Since the bundle setup is a wee bit old and the PDK is kept up to date, we shouldn’t be surprised if what was passing before now has failures. Here’s a sample of the errors I encountered on my first run – there were hundreds of them:

$ pdk validate

pdk (INFO): Running all available validators...

pdk (INFO): Using Ruby 2.5.1

pdk (INFO): Using Puppet 6.0.2

[✔] Checking metadata syntax (metadata.json tasks/*.json).

[✔] Checking module metadata style (metadata.json).

[✔] Checking Puppet manifest syntax (**/**.pp).

[✔] Checking Puppet manifest style (**/*.pp).

[✖] Checking Ruby code style (**/**.rb).

info: task-metadata-lint: ./: Target does not contain any files to validate (tasks/*.json).

warning: puppet-lint: dist/eyaml/manifests/init.pp:43:12: indentation of => is not properly aligned (expected in column 14, but found it in column 12)

warning: puppet-lint: dist/eyaml/manifests/init.pp:51:11: indentation of => is not properly aligned (expected in column 12, but found it in column 11)

warning: puppet-lint: dist/msswiki/manifests/init.pp:56:12: indentation of => is not properly aligned (expected in column 13, but found it in column 12)

warning: puppet-lint: dist/msswiki/manifests/init.pp:57:10: indentation of => is not properly aligned (expected in column 13, but found it in column 10)

warning: puppet-lint: dist/msswiki/manifests/init.pp:58:11: indentation of => is not properly aligned (expected in column 13, but found it in column 11)

warning: puppet-lint: dist/msswiki/manifests/init.pp:59:10: indentation of => is not properly aligned (expected in column 13, but found it in column 10)

warning: puppet-lint: dist/msswiki/manifests/init.pp:60:12: indentation of => is not properly aligned (expected in column 13, but found it in column 12)

warning: puppet-lint: dist/msswiki/manifests/rsync.pp:37:140: line has more than 140 characters

warning: puppet-lint: dist/msswiki/manifests/rsync.pp:43:140: line has more than 140 characters

warning: puppet-lint: dist/profile/manifests/access_request.pp:21:3: optional parameter listed before required parameter

warning: puppet-lint: dist/profile/manifests/access_request.pp:22:3: optional parameter listed before required parameter

We can control puppet-lint Rake settings in .sync.yml, but it only works for rake tasks. pdk validate will ignore it because puppet-lint isn’t invoked via rake. The same settings need to be put in .puppet-lint.rc in the proper format. That file is not populated via pdk, so just create it by hand. I don’t care about the arrow alignment or 140 characters checks, so I’ve added the appropriate lines to both files and re-run pdk update. We all have different preferences, just make sure they are reflected in both locations:

$ cat .sync.yml
---
.gitignore:
  required:
    - 'appveyor.yml'
    - '.gitlab-ci.yml'
    - '.travis.yml'
Rakefile:
  default_disabled_lint_checks:
    - '140chars'
    - 'arrow_alignment'

$ cat .puppet-lint.rc

--no-arrow_alignment-check

--no-140chars-check

$ grep disable Rakefile

PuppetLint.configuration.send('disable_relative')

PuppetLint.configuration.send('disable_140chars')

PuppetLint.configuration.send('disable_arrow_alignment')
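Since the two files can drift apart, a small consistency check may help. The following is only a sketch, assuming the .sync.yml key and the --no-<check>-check line format shown above; the helper names are hypothetical and the file contents are inlined so it runs without a controlrepo.

```ruby
require 'yaml'

# Inline copies of the relevant parts of the two files shown above.
LINT_SYNC_YML = <<~YAML
  ---
  Rakefile:
    default_disabled_lint_checks:
      - '140chars'
      - 'arrow_alignment'
YAML

PUPPET_LINT_RC = <<~RC
  --no-arrow_alignment-check
  --no-140chars-check
RC

# Checks disabled for the rake tasks, read from the .sync.yml text.
def rake_disabled(sync_text)
  YAML.safe_load(sync_text).dig('Rakefile', 'default_disabled_lint_checks') || []
end

# Checks disabled for pdk validate, read from lines shaped like --no-<check>-check.
def rc_disabled(rc_text)
  rc_text.scan(/^--no-(.+)-check$/).flatten
end

# Report checks that appear in one location but not the other.
def lint_mismatches(sync_text, rc_text)
  rake = rake_disabled(sync_text)
  rc = rc_disabled(rc_text)
  { missing_from_rc: rake - rc, missing_from_sync: rc - rake }
end

p lint_mismatches(LINT_SYNC_YML, PUPPET_LINT_RC)
```

In a real repo, the two heredocs would be replaced by File.read('.sync.yml') and File.read('.puppet-lint.rc'); two empty lists mean the settings agree.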

Now we can use pdk validate and see a lot fewer violations. We can try to automatically correct the remaining violations with pdk validate -a, which will also try to auto-fix other syntax violations, or pdk bundle exec rake lint_fix, which restricts fixes to just puppet-lint. Not all violations can be auto-corrected, so some may still need fixed manually. I also found I had a .rubocop.yml in a custom module’s directory causing rubocop failures, because apparently rubocop parses EVERY config file it finds no matter where it’s located, and had to remove it to prevent errors. It may take you numerous tries to get through this. I recommend fixing a few things and committing before moving on to the next set of violations, so that you can find your way back if you make mistakes. Here’s a command that can help you edit all the files that can’t be autofixed by puppet-lint or rubocop (assuming you’ve already completed an autofix attempt):

vi $(pdk validate | egrep "(puppet-lint|rubocop)" | awk '{print $3}' | awk -F: '{print $1}' | sort | uniq | xargs)
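For anyone who finds the egrep/awk pipeline hard to read, here is a rough Ruby equivalent of the same filtering. It is a sketch that works on a few sample lines copied from the validate output earlier in this post, and it assumes the file path is always the third whitespace-separated field, as it is in those lines. The function name is made up for this example.

```ruby
# Sample lines copied from the pdk validate output shown earlier.
VALIDATE_OUTPUT = <<~OUT
  info: task-metadata-lint: ./: Target does not contain any files to validate (tasks/*.json).
  warning: puppet-lint: dist/eyaml/manifests/init.pp:43:12: indentation of => is not properly aligned (expected in column 14, but found it in column 12)
  warning: puppet-lint: dist/msswiki/manifests/rsync.pp:37:140: line has more than 140 characters
  warning: puppet-lint: dist/msswiki/manifests/init.pp:56:12: indentation of => is not properly aligned (expected in column 13, but found it in column 12)
  warning: puppet-lint: dist/msswiki/manifests/init.pp:57:10: indentation of => is not properly aligned (expected in column 13, but found it in column 10)
OUT

# Hypothetical helper mirroring the shell pipeline:
# keep puppet-lint/rubocop lines, take the third field, strip :line:col, de-duplicate.
def files_with_violations(output)
  output.each_line
        .select { |line| line.match?(/puppet-lint|rubocop/) }
        .map { |line| line.split[2].to_s.split(':').first }
        .compact
        .uniq
        .sort
end

puts files_with_violations(VALIDATE_OUTPUT)
```

The result is the list of files that still need manual attention, which can then be handed to whatever editor you prefer.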

Alternatively, you can disable rubocop entirely if you want by adding the following to your .sync.yml. If you are only writing spec tests, this is probably fine, but if you are writing facts, types, and providers, I do not suggest it.

.rubocop.yml:
  selected_profile: off

We have quite a few methods to fix all the possible errors that come our way. Once we have fixed everything, we can move on to the Unit Tests. We will re-run validation again after the unit tests, to ensure any changes we make for unit tests do not introduce new violations.

Unit Tests

Previously, we used bundle exec rake spec to run unit tests. The PDK way is pdk test unit. It performs pretty much the same, but it does collect all the output before displaying it, so if you have lots of fixtures and tests, you won’t see any output for a good long while and then bam, you get it all at once. The results will probably be just a tad overwhelming at first:

$ pdk test unit

pdk (INFO): Using Ruby 2.5.1

pdk (INFO): Using Puppet 6.0.2

[✔] Preparing to run the unit tests.

[✖] Running unit tests.

Evaluated 782 tests in 110.76110479 seconds: 700 failures, 0 pending.

failed: rspec: ./spec/classes/profile/base__junos_spec.rb:11: Evaluation Error: Error while evaluating a Resource Statement, Unknown resource type: 'cron' (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/profile/manifests/base/junos.pp, line: 15, column: 3) on node build

profile::base::junos with defaults for all parameters should contain Cron[puppetrun]

Failure/Error:

context 'with defaults for all parameters' do

it { is_expected.to create_class('profile::base::junos') }

it { is_expected.to create_cron('puppetrun') }

end

end

failed: rspec: ./spec/classes/profile/base__linux_spec.rb:12: Evaluation Error: Error while evaluating a Resource Statement, Unknown resource type: 'sshkey' (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/ssh/manifests/hostkeys.pp, line: 13, column: 5) on node build

profile::base::linux on redhat-6-x86_64 disable openshift selinux policy should contain Selmodule[openshift-origin] with ensure => "absent"

Failure/Error:

if (facts[:os]['family'] == 'RedHat') && (facts[:os]['release']['major'] == '6')

context 'disable openshift selinux policy' do

it { is_expected.to contain_selmodule('openshift-origin').with_ensure('absent') }

it { is_expected.to contain_selmodule('openshift').with_ensure('absent') }

end

failed: rspec: ./spec/classes/profile/base__linux_spec.rb:162: Evaluation Error: Error while evaluating a Resource Statement, Unknown resource type: 'cron' (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/os_patching/manifests/init.pp, line: 113, column: 3) on node build

profile::base::linux on redhat-6-x86_64 when managing OS patching should contain Class[os_patching]

Failure/Error:

end

it { is_expected.to contain_class('os_patching') }

if (facts[:os]['family'] == 'RedHat') && (facts[:os]['release']['major'] == '7')

it { is_expected.to contain_package('yum-utils') }

failed: rspec: ./spec/classes/profile/base__linux_spec.rb:18: error during compilation: Evaluation Error: Unknown variable: '::sshdsakey'. (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/ssh/manifests/hostkeys.pp, line: 12, column: 6) on node build

profile::base::linux on redhat-7-x86_64 with defaults for all parameters should compile into a catalogue without dependency cycles

Failure/Error:

context 'with defaults for all parameters' do

it { is_expected.to compile.with_all_deps }

it { is_expected.to create_class('profile::base::linux') }

Whoa. Not cool. From 782 working tests to 700 failures is quite the explosion! And they blew up on missing resource types that are built in to Puppet. What happened? Puppet 6, that’s what! However…

Fix Puppet 5 Tests First

When I ran pdk convert, it updated my metadata.json to specify it supported Puppet versions 4.7.0 through 6.x because I was missing any existing requirements section. The PDK defaults to using the latest Puppet version your metadata supports. Whoops! It’s okay, we can test against Puppet 5, too. I recommend that we get our existing tests working with the version of Puppet we wrote them for, just to get back to a known good state. We don’t want to be troubleshooting too many changes at once.
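To see which Puppet versions our metadata claims to support, we can read the requirements section ourselves. This is a sketch only: the JSON below is a hypothetical fragment, and the exact version_requirement string that pdk convert writes is my assumption based on the range described above. The helper names are invented for illustration.

```ruby
require 'json'

# Hypothetical metadata.json fragment; the version_requirement string is an
# assumption based on the "4.7.0 through 6.x" range described above.
METADATA_JSON = <<~JSON
  {
    "name": "example-controlrepo",
    "requirements": [
      { "name": "puppet", "version_requirement": ">= 4.7.0 < 7.0.0" }
    ]
  }
JSON

# Return the Puppet version_requirement string, or nil when none is declared.
def puppet_requirement(metadata_text)
  requirements = JSON.parse(metadata_text).fetch('requirements', [])
  entry = requirements.find { |req| req['name'] == 'puppet' }
  entry && entry['version_requirement']
end

# True when the given Puppet version satisfies every clause of the requirement.
def supports?(requirement, version)
  clauses = requirement.scan(/([<>=]+)\s*([\d.]+)/).map { |op, ver| "#{op} #{ver}" }
  Gem::Requirement.new(*clauses).satisfied_by?(Gem::Version.new(version))
end

requirement = puppet_requirement(METADATA_JSON)
%w[5.5.6 6.0.2].each do |version|
  puts "Puppet #{version} supported: #{supports?(requirement, version)}"
end
```

If the upper bound includes Puppet 6, that explains why the PDK picked it for the test run above.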

There are two ways to specify the version to use. There’s the CLI envvar PDK_PUPPET_VERSION that accepts a simple number like 5 or 6, which is preferred for automated systems like CI/CD, rather than humans. You can also use --puppet-version or --pe-version to set an exact version. I’m an old curmudgeon, so I’m using the non-preferred envvar setting today, but Puppet recommends using the actual program arguments! Regardless of how you specify the version, the PDK changes not just the Puppet version, but which version of Ruby it uses:

$ PDK_PUPPET_VERSION='5' pdk test unit

pdk (INFO): Using Ruby 2.4.4

pdk (INFO): Using Puppet 5.5.6

[✔] Preparing to run the unit tests.

[✖] Running unit tests.

Evaluated 782 tests in 171.447295254 seconds: 519 failures, 0 pending.

failed: rspec: ./spec/classes/msswiki/init_spec.rb:24: error during compilation: Evaluation Error: Error while evaluating a Function Call, You must provide a hash of packages for wiki implementation. (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/msswiki/manifests/init.pp, line: 109, column: 5) on node build

msswiki on redhat-6-x86_64 when using default params should compile into a catalogue without dependency cycles

Failure/Error:

end

it { is_expected.to compile.with_all_deps }

it { is_expected.to create_class('msswiki') }

failed: rspec: ./spec/classes/profile/apache_spec.rb:31: Evaluation Error: Error while evaluating a Resource Statement, Evaluation Error: Empty string title at 0. Title strings must have a length greater than zero. (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/concat/manifests/setup.pp, line: 59, column: 10) (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/apache/manifests/init.pp, line: 244) on node build

profile::apache on redhat-7-x86_64 with additional listening ports should contain Firewall[100 Inbound apache listening ports] with dport => [80, 443, 8088]

Failure/Error:

end

it {

is_expected.to contain_firewall('100 Inbound apache listening ports').with(dport: [80, 443, 8088])

}

failed: rspec: ./spec/classes/profile/base__linux_spec.rb:12: Evaluation Error: Unknown variable: '::sshecdsakey'. (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/ssh/manifests/hostkeys.pp, line: 36, column: 6) on node build

profile::base::linux on redhat-6-x86_64 disable openshift selinux policy should contain Selmodule[openshift-origin] with ensure => "absent"

Failure/Error:

if (facts[:os]['family'] == 'RedHat') && (facts[:os]['release']['major'] == '6')

context 'disable openshift selinux policy' do

it { is_expected.to contain_selmodule('openshift-origin').with_ensure('absent') }

it { is_expected.to contain_selmodule('openshift').with_ensure('absent') }

end

Some of us may be lucky to make it through without errors here, but I assume most of us encounter at least a few failures, like I did – “only” 519 compared to 700 before. Don’t worry, we can fix this! To help us focus a bit, we can run tests on individual spec files using pdk bundle exec rspec <filename> (remembering to specify PDK_PUPPET_VERSION or to export the variable). Everyone has different problems here, but there are some common failures, such as missing custom facts:

69) profile::base::linux on redhat-7-x86_64 when managing OS patching should contain Package[yum-utils]

Failure/Error: include ::ssh::hostkeys

Puppet::PreformattedError:

Evaluation Error: Unknown variable: '::sshdsakey'. (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/ssh/manifests/hostkeys.pp, line: 12, column: 6) on node build

Nate McCurdy commented that instead of calling rspec directly, you can pass a comma-separated list of files with --tests, e.g. pdk test unit --tests path/to/spec/file.rb,path/to/spec/file2.rb
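As a small illustration of that tip, this Ruby sketch assembles the argument list for a focused run from a list of spec files. The function name is hypothetical; the two paths come from the failure output above, and the commented-out system call shows one way it might be launched with the Puppet 5 environment variable.

```ruby
# Hypothetical helper: build the pdk command for a focused run of selected spec files.
def pdk_tests_command(spec_files)
  ['pdk', 'test', 'unit', '--tests', spec_files.join(',')]
end

files = [
  'spec/classes/profile/base__linux_spec.rb',
  'spec/classes/profile/apache_spec.rb'
]

puts pdk_tests_command(files).join(' ')

# To actually run it against Puppet 5 (requires the PDK to be installed):
# system({ 'PDK_PUPPET_VERSION' => '5' }, *pdk_tests_command(files))
```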

I defined my custom facts in spec/spec_helper.rb. That has definitely changed. Here’s part of the diff from running pdk convert:

$ git diff origin/production spec/spec_helper.rb

diff --git a/spec/spec_helper.rb b/spec/spec_helper.rb

index de3e7e6..5e721b7 100644

--- a/spec/spec_helper.rb

+++ b/spec/spec_helper.rb

@@ -1,45 +1,44 @@

require 'puppetlabs_spec_helper/module_spec_helper'

require 'rspec-puppet-facts'

-add_custom_fact :concat_basedir, '/dne'

-add_custom_fact :is_pe, true

-add_custom_fact :root_home, '/root'

-add_custom_fact :pe_server_version, '2016.4.0'

-add_custom_fact :selinux, true

-add_custom_fact :selinux_config_mode, 'enforcing'

-add_custom_fact :sshdsakey, ''

-add_custom_fact :sshecdsakey, ''

-add_custom_fact :sshed25519key, ''

-add_custom_fact :pe_version, ''

-add_custom_fact :sudoversion, '1.8.6p3'

-add_custom_fact :selinux_agent_vardir, '/var/lib/puppet'

-

+include RspecPuppetFacts

+

+default_facts = {

+ puppetversion: Puppet.version,

+ facterversion: Facter.version,

+}

+default_facts_path = File.expand_path(File.join(File.dirname(__FILE__), 'default_facts.yml'))

+default_module_facts_path = File.expand_path(File.join(File.dirname(__FILE__), 'default_module_facts.yml'))

Instead of modifying spec/spec_helper.rb, facts should go in spec/default_facts.yml and spec/default_module_facts.yml. As the former is modified by pdk update, it is easier to maintain the latter. Review the diff of spec/spec_helper.rb and spec/default_facts.yml (if we have the latter) for our previous custom facts and their values. When a test is failing for a missing fact, we can add it to spec/default_module_facts.yml in the format factname: “factvalue”.
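If there are many old add_custom_fact lines to carry over, a short script can do the translation. The following is a minimal sketch, assuming the simple one-line form seen in the diff above; it only understands quoted strings and true/false, and the helper name is my own.

```ruby
require 'yaml'

# A few of the add_custom_fact lines removed from spec/spec_helper.rb in the diff above.
OLD_HELPER_LINES = <<~RUBY
  add_custom_fact :concat_basedir, '/dne'
  add_custom_fact :is_pe, true
  add_custom_fact :sshdsakey, ''
  add_custom_fact :sudoversion, '1.8.6p3'
RUBY

# Hypothetical helper: turn `add_custom_fact :name, value` lines into a fact hash.
# Only single-quoted strings and true/false are converted; anything else stays raw text.
def facts_from_helper(text)
  text.scan(/^add_custom_fact :(\w+), (.+)$/).map do |name, raw|
    value = case raw.strip
            when 'true' then true
            when 'false' then false
            when /\A'(.*)'\z/ then Regexp.last_match(1)
            else raw.strip
            end
    [name, value]
  end.to_h
end

# Print YAML suitable for pasting into spec/default_module_facts.yml.
puts facts_from_helper(OLD_HELPER_LINES).to_yaml
```

Review the generated YAML before committing it, since facts whose values were computed in Ruby will not survive this kind of text conversion.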

1) profile::base::linux on redhat-6-x86_64 disable openshift selinux policy should contain Selmodule[openshift-origin] with ensure => "absent"

Failure/Error: include concat::setup

Puppet::PreformattedError:

Evaluation Error: Error while evaluating a Resource Statement, Evaluation Error: Empty string title at 0. Title strings must have a length greater than zero. (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/concat/manifests/setup.pp, line: 59, column: 10) (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/ssh/manifests/server/config.pp, line: 12) on node build

This is related to an older version of puppetlabs/concat (v1.2.5). The latest is v5.1.0. After updating my Puppetfile and .fixtures.yml with the new version, I ran pdk bundle exec rake spec_prep to update the test fixtures, and this is resolved.

2) profile::base::linux on redhat-6-x86_64 domain_join is true should contain Class[profile::domain_join]

Failure/Error: include domain_join

Puppet::PreformattedError:

Evaluation Error: Error while evaluating a Function Call, Class[Domain_join]:

expects a value for parameter 'domain_fqdn'

expects a value for parameter 'domain_shortname'

expects a value for parameter 'ad_dns'

expects a value for parameter 'register_account'

expects a value for parameter 'register_password' (file: /home/rnelson0/puppet/controlrepo/spec/fixtures/modules/profile/manifests/domain_join.pp, line: 8, column: 3) on node build

In this case, the mandatory parameters for an included class were not provided. The let (:params) block of our rspec contexts only allows us to set parameters for the current class. We have been pulling these parameters from hiera instead. This data should be coming from spec/fixtures/hieradata/default.yml. However, the hiera lookup settings were also removed from spec/spec_helper.rb, breaking the existing hiera configuration:

RSpec.configure do |c|

- c.hiera_config = File.expand_path(File.join(__FILE__, '../fixtures/hiera.yaml'))

- default_facts = {

- puppetversion: Puppet.version,

- facterversion: Facter.version

- }

There is no replacement setting provided. The PDK was designed with hiera use in mind, so add this line (replacing the path if your hiera.yaml is stored elsewhere) to .sync.yml and run pdk update. Your hiera config should start working again:

spec/spec_helper.rb:
  hiera_config: 'spec/fixtures/hiera.yaml'

These are just some common issues with a PDK conversion, but we may have others to resolve. We just need to keep iterating until we get through everything.

Things are looking good! But we are just done with the Puppet 5 rodeo. Before we move on to Puppet 6, now is a good time to make sure syntax validation works, and if you need to make changes to syntax, you then run the Puppet 5 tests that you know should work. Get that all settled before moving on.

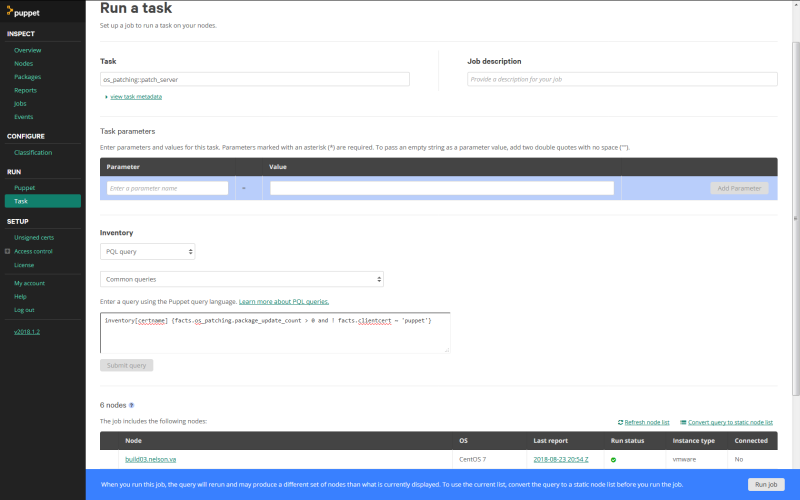

Puppet 6 Unit Tests

There’s one big change to be aware of before we even run unit tests against Puppet 6. To make updating core types easier, without requiring a brand new release of Puppet, a number of types were moved into dedicated modules. This means that for Puppet 6 testing, we need to update our Puppetfile and .fixtures.yml (though the puppet agent all-in-one package bundles these modules, we do not want our tests relying on an installed puppet agent). When we update these files, we need to make sure we ONLY deploy these core modules on Puppet 6, not Puppet 5 (both for the master and for testing), or we will encounter issues with Puppet 5. The Puppetfile is actually ruby code, so we can check the version before loading the modules (see note below), and .fixtures.yml accepts a puppet_version parameter to modules. We can click on each module name here to get the link for the replacement module. We do not have to add all of the modules, just the ones we use, but including the ones we are likely to use or have other modules depend on can reduce friction. The changes will look like this:

# Puppetfile
require 'puppet'
# as of puppet 6 they have removed several core modules into separate modules
if Puppet.version =~ /^6\.\d+\.\d+/
  mod 'puppetlabs-augeas_core', '1.0.3'
  mod 'puppetlabs-cron_core', '1.0.0'
  mod 'puppetlabs-host_core', '1.0.1'
  mod 'puppetlabs-mount_core', '1.0.2'
  mod 'puppetlabs-sshkeys_core', '1.0.1'
  mod 'puppetlabs-yumrepo_core', '1.0.1'
end

# .fixtures.yml
fixtures:
  forge_modules:
    augeas_core:
      repo: "puppetlabs/augeas_core"
      ref: "1.0.3"
      puppet_version: ">= 6.0.0"
    cron_core:
      repo: "puppetlabs/cron_core"
      ref: "1.0.0"
      puppet_version: ">= 6.0.0"
    host_core:
      repo: "puppetlabs/host_core"
      ref: "1.0.1"
      puppet_version: ">= 6.0.0"
    mount_core:
      repo: "puppetlabs/mount_core"
      ref: "1.0.2"
      puppet_version: ">= 6.0.0"
    scheduled_task:
      repo: "puppetlabs/scheduled_task"
      ref: "1.0.0"
      puppet_version: ">= 6.0.0"
    selinux_core:
      repo: "puppetlabs/selinux_core"
      ref: "1.0.1"
      puppet_version: ">= 6.0.0"
    sshkeys_core:
      repo: "puppetlabs/sshkeys_core"
      ref: "1.0.1"
      puppet_version: ">= 6.0.0"
    yumrepo_core:
      repo: "puppetlabs/yumrepo_core"
      ref: "1.0.1"
      puppet_version: ">= 6.0.0"
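One thing to watch in the Puppetfile version check above: the regex only matches versions starting with 6, so it would silently stop loading the modules on a later major version. As a hedged alternative, this sketch compares parsed versions instead, which lines up with the ">= 6.0.0" constraint used in .fixtures.yml. The function name is hypothetical; in a real Puppetfile we would pass Puppet.version rather than the sample strings used here.

```ruby
# Hypothetical helper: decide whether the *_core modules are needed for a Puppet version.
# Comparing parsed versions (rather than matching /^6\./) also covers later majors.
def core_modules_needed?(puppet_version)
  Gem::Version.new(puppet_version) >= Gem::Version.new('6.0.0')
end

# Sample versions only; 5.5.6 and 6.0.2 are the versions the PDK reported above.
%w[5.5.6 6.0.2 7.0.0].each do |version|
  puts "#{version}: #{core_modules_needed?(version)}"
end
```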

Note: Having performed an actual upgrade to Puppet 6 now, I do NOT recommend adding the modules to the Puppetfile after all, unless you are specifying a newer version of the modules than is provided with the version of puppet-agent you are using, or you are not using the AIO versions, and ONLY if you have no Puppet 5 agents. Puppet 5 agents connecting to a Puppet 6 master will pluginsync these modules and throw errors instead of applying a catalog. If you do have multiple compile masters, you could conceivably keep a few running Puppet 5 and only have Puppet 5 agents connect to it, but that seems like a really specific and potentially problematic scenario, so in general, I repeat, I do NOT recommend adding the core modules to the Puppetfile. They must be placed in the .fixtures.yml file for testing, though.

Now give pdk test unit a try and see how it behaves. All the missing types will be back, so any errors we see now should be related to actual failures, or some other edge case I did not experience.

Note: I experienced the error Error: Evaluation Error: Error while evaluating a Function Call, undefined local variable or method `created' for Puppet::Pops::Loader::RubyLegacyFunctionInstantiator:Class when running my Puppet 6 tests immediately after working on the Puppet 5 tests. Nothing I found online could resolve this and when I returned to it later, it worked fine. I could not replicate the error, so I am unsure of what caused it. If you run into that error, I suggest starting a new session and running git clean -ffdx to remove unmanaged files, so that you start with a clean environment.

Updating CI/CD Integrations

Once both pdk validate and pdk test unit complete without error, we need to update the automated checks our CI/CD system uses. We all use different systems, but thankfully the PDK has many of us covered. For those who use Travis CI, Appveyor, or Gitlab, there are pre-populated .travis.yml, appveyor.yml, and .gitlab-ci.yml files, respectively. For those of us who use Jenkins, we have two options: 1) Copy one of these CI settings and integrate them into our build process (traditional or pipeline) or 2) apply profile::pdk to the Jenkins node and use the PDK for tests. Let’s look at the first option, basing it off the Travis CI config:

before_install:
  - bundle -v
  - rm -f Gemfile.lock
  - gem update --system
  - gem --version
  - bundle -v
script:
  - 'bundle exec rake $CHECK'
bundler_args: --without system_tests
rvm:
  - 2.5.0
env:
  global:
    - BEAKER_PUPPET_COLLECTION=puppet6 PUPPET_GEM_VERSION="~> 6.0"
matrix:
  fast_finish: true
  include:
    -
      env: CHECK="syntax lint metadata_lint check:symlinks check:git_ignore check:dot_underscore check:test_file rubocop"
    -
      env: CHECK=parallel_spec
    -
      env: PUPPET_GEM_VERSION="~> 5.0" CHECK=parallel_spec
      rvm: 2.4.4
    -
      env: PUPPET_GEM_VERSION="~> 4.0" CHECK=parallel_spec
      rvm: 2.1.9

We have to merge this into something useful for Jenkins. I am unfamiliar with Pipelines myself (I know, it’s the future, but I have $reasons!), but I have built a Jenkins server with RVM installed and configured it with a freestyle job. Here’s the current job:

#!/bin/bash

[[ -s /usr/local/rvm/scripts/rvm ]] && source /usr/local/rvm/scripts/rvm

# Use the correct ruby

rvm use 2.1.9

git clean -ffdx

bundle install --path vendor --without system_tests

bundle exec rake test

The new before_install section cleans up the local directory, equivalent to git clean -ffdx, but it also spits out some version information and runs gem update. These are optional, and the latter is only helpful if you have your gems cached elsewhere (the git clean will wipe the updated gems otherwise, wasting time). The bundler_args are already part of the bundle install command. The rvm version varies by puppet version, that will need tweaked. The test command is now bundle exec rake $CHECK, with a variety of checks added in the matrix section. test used to do everything in the first 2 matrix sections; parallel_spec just runs multiple tests at once instead of in serial which can be faster. The 3rd and 4th matrix sections are for older puppet versions. We can put this together into multiple jobs, into a single job that tests multiple versions of Puppet, or into a single job testing just one Puppet version. Here’s what a Jenkins job would look like that tests Puppet 6 and 5:

#!/bin/bash

[[ -s /usr/local/rvm/scripts/rvm ]] && source /usr/local/rvm/scripts/rvm

# Puppet 6

export BEAKER_PUPPET_COLLECTION=puppet6

export PUPPET_GEM_VERSION="~> 6.0"

rvm use 2.5.0

bundle -v

git clean -ffdx

# Comment the next line if you do not have gems cached outside the job workspace

gem update --system

gem --version

bundle -v

bundle install --path vendor --without system_tests

bundle exec rake syntax lint metadata_lint check:symlinks check:git_ignore check:dot_underscore check:test_file rubocop

bundle exec rake parallel_spec

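# Puppet 5

rvm use 2.4.4

export PUPPET_GEM_VERSION="~> 5.0"

# Start clean so Gemfile.lock and the vendored gems from the Puppet 6 run are not reused

git clean -ffdx

bundle install --path vendor --without system_tests

bundle exec rake parallel_spec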

This creates parity with the pre-defined CI tests for other services.
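If the list of Puppet versions grows, the repeated blocks in the job above could be folded into a small function. This is only a sketch under a few assumptions: the run and test_puppet helpers and the DRY_RUN switch are names made up for illustration, the Ruby versions are simply the ones used in the job above, and every Puppet version gets a clean workspace and a fresh bundle install. By default it only prints the commands so you can review them; set DRY_RUN=0 in the Jenkins job to actually execute them.

```shell
#!/bin/bash
# Sketch only. DRY_RUN defaults to 1, so commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"

# Load RVM only when we are really going to run something.
if [ "$DRY_RUN" != "1" ] && [ -s /usr/local/rvm/scripts/rvm ]; then
  . /usr/local/rvm/scripts/rvm
fi

# Hypothetical helper: print the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper.
# Usage: test_puppet PUPPET_MAJOR RUBY_VERSION [extra rake targets...]
test_puppet() {
  puppet_major="$1"
  ruby_version="$2"
  shift 2

  export PUPPET_GEM_VERSION="~> ${puppet_major}.0"
  run rvm use "$ruby_version"
  run git clean -ffdx
  run bundle install --path vendor --without system_tests
  # Any remaining arguments are extra rake targets (the static checks).
  if [ "$#" -gt 0 ]; then
    run bundle exec rake "$@"
  fi
  run bundle exec rake parallel_spec
}

# Static checks run once, against the newest Puppet; older versions only run specs.
test_puppet 6 2.5.0 syntax lint metadata_lint check:symlinks check:git_ignore check:dot_underscore check:test_file rubocop
test_puppet 5 2.4.4
```

Adding another Puppet version then becomes a single extra test_puppet line with the matching Ruby version.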

The other option is adding profile::pdk to the Jenkins server, probably through the role, and using the PDK to run tests. That Jenkins freestyle job looks a lot simpler:

#!/bin/bash

PDK=/usr/local/bin/pdk

echo -n "PDK Version: "

$PDK --version

# Puppet 6

git clean -ffdx

$PDK validate

$PDK test unit

# Puppet 5

git clean -ffdx

PDK_PUPPET_VERSION=5 $PDK test unit

This is much simpler, and it should not need to be updated until we remove Puppet 5 or add Puppet 7 when it is released, whereas the RVM version in the bundler-based job may need to be tweaked throughout the Puppet 6 lifecycle as Ruby versions change. However, the tests aren’t exactly the same. Currently, pdk validate does not run the rake target check:git_ignore, and possibly other check: tasks. In my opinion, as the PDK improves, the benefit of only having to update the PDK package version and not the git-based automation outweighs the single missing check and the maintenance of RVM on a Jenkins server. And for those of us using Travis CI/AppVeyor/GitLab CI, it definitely makes sense to stick with the provided test setup as it requires almost no maintenance.

I used this earlier without explaining it, but the PDK also provides the ability to run pdk bundle, similar to the native bundle but using the vendored Ruby and gems provided by the PDK. We can run individual tests like pdk bundle exec rake check:git_ignore, or install the PDK and modify the “bundle” Jenkins job to recreate the bundler setup using the PDK and not have to worry about RVM at all. I’ll leave that last one as an exercise for the reader, though.
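As a rough sketch of the first of those ideas, the PDK-based job could run pdk validate and pdk test unit, then use pdk bundle for any rake targets that pdk validate skips. The run and pdk_job helpers, the DRY_RUN switch, and the EXTRA_CHECKS variable are hypothetical names for illustration, and check:git_ignore is the only missing target identified above, so treat the list as a starting point. Like any dry-run wrapper, it prints the commands by default; set DRY_RUN=0 to execute them.

```shell
#!/bin/bash
# Sketch only. DRY_RUN defaults to 1, so commands are printed, not executed.
DRY_RUN="${DRY_RUN:-1}"
PDK="${PDK:-/usr/local/bin/pdk}"

# Rake targets that `pdk validate` does not cover (space separated).
# Only check:git_ignore is known to be missing; extend the list as needed.
EXTRA_CHECKS="${EXTRA_CHECKS:-check:git_ignore}"

# Hypothetical helper: print the command in dry-run mode, otherwise run it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Hypothetical helper: the PDK job plus the extra rake checks.
pdk_job() {
  run git clean -ffdx
  run "$PDK" validate
  run "$PDK" test unit
  # Run each extra rake target with the Ruby and gems vendored by the PDK.
  for task in $EXTRA_CHECKS; do
    run "$PDK" bundle exec rake "$task"
  done
}

pdk_job
```

This keeps RVM out of the picture entirely while closing the gap between pdk validate and the bundler-based checks.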

We must be sure to review our entire Puppet pull request process and see what other integrations need to be updated, and of course we must update documentation for our colleagues. Remember, documentation is part of “done”, so we cannot say we are done until we update it.

Finally, with all updates in place, submit a Pull Request for your controlrepo changes. This Pull Request must go through the new process, not just to verify that it passes, but to identify any configuration steps you missed or did not document properly.

Summary

Today, we looked at converting our controlrepo to use the Puppet Development Kit for testing instead of bundler-based testing. It required lots of changes to our controlrepos, many of which the PDK handled for us via autocorrect; others involved manual updates. We reviewed a variety of changes required for CI/CD integrations such as Travis CI or Jenkins. We reviewed the specifics of our setup that others don’t share so we had a working setup top to bottom, and we updated our documentation so all of our colleagues can make use of the new setup. Finally, we opened a PR with our changes to validate the new configuration.

By using the PDK, we have leveraged the hard work of many Puppet employees and Puppet users alike who provide a combination of rigorously vetted sets of working dependencies and beneficial practices, and we can continue to improve those benefits by simply updating our version of the PDK and templates in the future. This is a drastic reduction in the mental load we all have to carry to keep up with Puppet best practices, an especially significant burden on those responsible for Puppet in their CI/CD systems. I would like to thank everyone involved with the PDK, including David Schmitt, Lindsey Smith, Tim Sharpe, Bryan Jen, Jean Bond, and the many other contributors inside and outside Puppet who made the PDK possible.