Over the 12 days of #Commitmas, you may have submitted a Pull Request (PR) to Matt Brender’s 12-days-of-commitmas repository. I’ve done the same thing. Once the PR is merged, your fork is out of sync with the main repository. If you do more development on your fork, you may start to run into conflicts when you submit the next PR.

Adding additional remotes

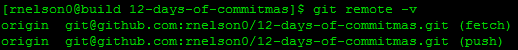

When you set up your fork, github (bitbucket, etc.) helpfully provides you with the repository’s remote information in HTTPS or SSH format (see Jonathan Frappier’s article on forking for more information). After you clone your fork’s repo, you’ll have a single remote called origin. You can see your remotes with git remote -v.

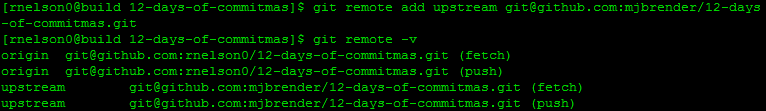

We can handle PRs through the github interface, but that doesn’t help us keep things in sync (well, you could delete your fork and make a new one, but that’s a lot of work and wipes out any pending changes you’ve made, so we’ll consider that unfeasible). The best way to manage this is to add the original repo as another remote. The remote can be called anything you want. We will call it upstream, a common pattern with github users.

As you may remember, you provide the keyword origin to your push statement. That’s not a magic word, it’s referring to the remote to which you are pushing your commit history to. Most of the time you don’t have rights to push to someone else’s repo, but git push upstream <branch> is a legitimate git command. You can also use the upstream remote with other commands.

Fetch and Pull

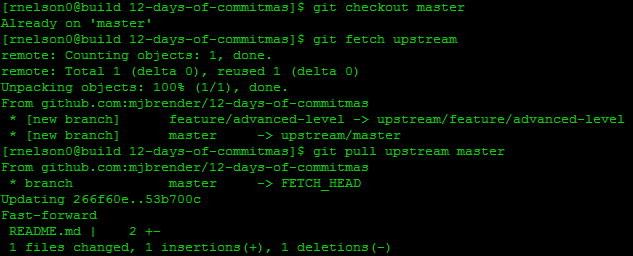

You can fetch the latest information from the remove with git fetch upstream. This will pull down all references and branch names. You can then git pull upstream master to fast-forward apply all changes in the upstream’s master branch to your current branch. I made sure to make sure I’m in my own master branch before I began, but you could do this in any branch – great if you started some edits based off master and a few PRs have been applied since.

Once you’re done, don’t forget to push changes to YOUR remote. You don’t technically need to do it, but if you work on another machine, you’d have to perform the whole clone/remote add/fetch/pull process again.

Now you’re able to see all the changes made in the repo and you’re able to base new branches off the up-to-date contents of your master branch!